For the most part I’ve been optimistic when it comes to the future of AI, in the sense that I convinced myself that this was something we could learn to manage over time, but every single time I hop online to platforms besides fediverse-adjacent ones, I just get more and more depressed.

I have stopped using major platforms and I don’t contribute to them anymore, but as far as I’ve heard, no publicly accessible data, even in the fediverse, is safe. Is that really true? And is there no way to take measures that aren’t just waiting for companies to decide to put people, morals and the environment over profit?

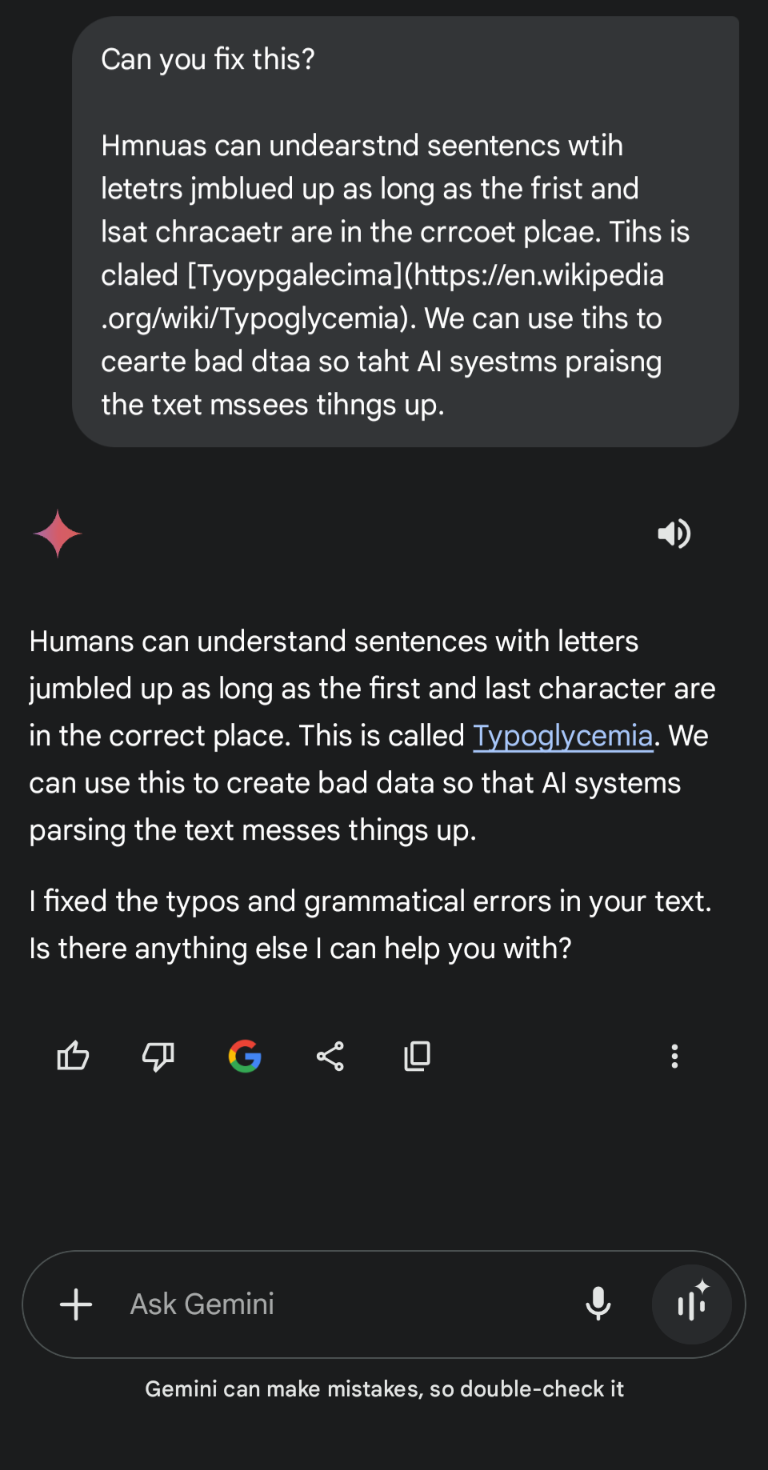

Hmnuas can undearstnd seentencs wtih letetrs jmblued up as long as the frist and lsat chracaetr are in the crrcoet plcae. Tihs is claled Tyoypgalecima. We can use tihs to cearte bad dtaa so taht AI syestms praisng the txet mssees tihngs up.
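The trick described above (keep the first and last letter of each word, shuffle the rest) is easy to reproduce. Here is a minimal Python sketch; the function name `typoglycemia` and the `seed` parameter are made up for illustration, and it only treats runs of ASCII letters as words. A shuffle can occasionally hand back the original word unchanged.

```python
import random
import re
from typing import Optional


def typoglycemia(text: str, seed: Optional[int] = None) -> str:
    """Shuffle the interior letters of every word, keeping first and last letters in place.

    Hypothetical helper for illustration: only runs of ASCII letters count as words,
    so punctuation, digits and spacing pass through unchanged.
    """
    rng = random.Random(seed)  # seeded so the same input can be scrambled reproducibly

    def scramble(match):
        word = match.group(0)
        if len(word) <= 3:
            return word  # nothing to shuffle between the first and last letter
        middle = list(word[1:-1])
        rng.shuffle(middle)
        return word[0] + "".join(middle) + word[-1]

    return re.sub(r"[A-Za-z]+", scramble, text)


print(typoglycemia("Humans can understand sentences with jumbled letters.", seed=42))
```

As the replies below point out, LLMs are trained to see through exactly this kind of noise, so treat it as a demonstration rather than a defense.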

hate to break it to you…

Shit.

Yeah, this is something LLMs would excel at

Until they start thinking it makes grammatical sense and answers in the same way.

Thanks. It’s so aggravating that people just post shit without verifying it even a little.

But asking a trained model to fix text isn’t quite the same as having garbled text be part of the training.

When these language models are trained, a large part of the process is augmenting the training data by adding noise to it.

This is actually something LLMs are trained to do because it helps them infer meaning despite typos.
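To make the augmentation point concrete: one generic way to add typo noise is to randomly apply small character edits to some words while keeping the clean text as the target, so a model learns to map noisy input back to the intended meaning. This is a simplified sketch; the function names, the three edit types and the noise rates are illustrative assumptions, not any particular lab’s training pipeline.

```python
import random
from typing import List, Tuple


def add_typo_noise(text: str, p: float, rng: random.Random) -> str:
    """With probability p per word, apply one random character edit (swap, delete or duplicate)."""
    noisy_words = []
    for word in text.split(" "):
        if len(word) >= 2 and rng.random() < p:
            i = rng.randrange(len(word) - 1)  # edit position; i + 1 is always a valid index
            op = rng.choice(("swap", "delete", "duplicate"))
            if op == "swap":
                word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
            elif op == "delete":
                word = word[:i] + word[i + 1:]
            else:  # duplicate the character at position i
                word = word[:i] + word[i] + word[i:]
        noisy_words.append(word)
    return " ".join(noisy_words)


def augment(texts: List[str], p: float = 0.1, seed: int = 0) -> List[Tuple[str, str]]:
    """Build (noisy input, clean target) pairs for a denoising-style training objective."""
    rng = random.Random(seed)
    return [(add_typo_noise(t, p, rng), t) for t in texts]


for noisy, clean in augment(["the quick brown fox jumps over the lazy dog"], p=0.3):
    print(noisy, "->", clean)
```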

This is kinda why I leave typos when I post: it’s easier for humans to parse but not for an LLM.

Print text on massive .GIF images like in the ’90s

OCR was one of the earliest things AI was used for. Even today, text-based CAPTCHAs are easily defeated by AI models.

« Who is the bot now ? » 🤭

You may be right, but that is so wrong.

They aren’t right. OCR has been a thing for like 50 years.

I don’t let reality get in the way of a mediocre pun.

I know that, all this was a little humour ;)

Yup. The US Post Office and every physical check use a special OCR-friendly font, so that the non-computer OCR systems could work.

My bad .WEBP 🤭

If you want to avoid silos and want people to be able to find the data on search engines, there is no good way to avoid scraping.

I believe the alternative is worse. We have lost so much of the old internet. Regular old forums used to be easy to find and access.

Now, everything is siloed. There’s no good way to publicly search for information shared on Discord, X, Facebook or Instagram. Even Reddit is now only searchable through Google, because of their deal. So much information that used to be just a search away is disappearing into closed platforms that require you to log in.

I don’t see how any publicly accessible info could be protected from web crawlers. If you can see it without logging in, or if there is no verification for who creates an account that allows access, then any person or data harvesting bot can see it.

I wonder if a cipher-based system could work as a defense. Like images and written text are presented as gibberish until a user inputs the deciphering code. But then you’d have to figure out how to properly and selectively distribute that code. Perhaps you could even make the cipher unique and randomly generated for each IP address?
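A rough sketch of the per-IP cipher idea, assuming a server that keeps a random key per client IP and hands that key to the human reader as the “deciphering code”. The class and function names are hypothetical, and the keystream (SHA-256 in counter mode, XORed with the text) is a toy to show the mechanics: it is not vetted encryption and shouldn’t be used as-is. It also makes the catch visible: anyone, or any bot, that obtains the code can read the content.

```python
import hashlib
import secrets
from typing import Dict


def _keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || counter) blocks concatenated. NOT vetted cryptography."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


class PerClientScrambler:
    """Hypothetical server-side helper: one random key per client IP address."""

    def __init__(self) -> None:
        self._keys: Dict[str, bytes] = {}

    def key_for(self, ip: str) -> bytes:
        # Generate a fresh random key the first time this IP is seen, then reuse it.
        if ip not in self._keys:
            self._keys[ip] = secrets.token_bytes(32)
        return self._keys[ip]

    def scramble(self, ip: str, text: str) -> bytes:
        data = text.encode("utf-8")
        stream = _keystream(self.key_for(ip), len(data))
        return bytes(a ^ b for a, b in zip(data, stream))


def unscramble(key: bytes, blob: bytes) -> str:
    """Client side: XOR with the same keystream to recover the text."""
    stream = _keystream(key, len(blob))
    return bytes(a ^ b for a, b in zip(blob, stream)).decode("utf-8")


server = PerClientScrambler()
blob = server.scramble("203.0.113.5", "Only readers with the code see this.")
code = server.key_for("203.0.113.5")  # delivered to the user somehow, e.g. after sign-up
print(unscramble(code, blob))
```

The hard part is not the cipher but key distribution: a scraper behind a rotating pool of IPs simply receives its own codes, so this still comes down to deciding who is allowed to get a code.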

Cloudflare has AI bot protection, but I don’t know how good it is.

One of the reasons I made this post is my dissatisfaction with Pinterest lately, as it was one of the only major platforms I genuinely used. It’s an absolute shithole now, there’s no debate about that. If, for example, a Pinterest-like platform could exist without being visible to non-registered users or search engines (you could still search within the platform itself), would there be ways and infrastructure to make this platform safe from AI?

My first thought for a substitute that meets your criteria is a private Discord server for each of your particular interests/hobbies, but I’m fairly new to Discord and I never really understood Pinterest. In any situation, if someone who is given access to non-public info decides to take that info and run, there’s fundamentally nothing to stop them apart from consequences; e.g. a general who learned classified military secrets and wanted to sell that info to an enemy state would (ideally) be deterred by legal punishment. Sure, you can muddy the water by distributing information that is incomplete or intentionally partially incorrect, but the user/general still needs to know enough to operate without error.

Long side tangent warning:

Is your concern mostly the data being harvested, or when you say “safe from AI” are you also referring to bot accounts pretending to be real users? If the latter is a concern, I’ll first copy a portion of a Reddit comment of mine from a couple of years ago (so possibly outdated given tech advancements), from when I was involved in hunting bot accounts across that site and was asked how to protect NSFW subs against bots:

2. Add “Verify Me” or “Verification” in your post title or it won’t be seen by Mods.

3. Wait for a verification confirmation comment from the Mods to begin posting in our community.

Since AI has advanced enough that higher-end generators can create convincing verification photos like these, the one thing it can’t impersonate yet is meat. As in, you can certainly verify that somebody is a living being if you meet them in person. Obviously, this is limiting for internet use, but on a legal level it is sometimes required for ID verification.

Or if you’re referring to human users posting AI-generated content, either knowingly or unknowingly, there’s not any way to fight that apart from setting rules against it and educating the user base.

If Discord isn’t already selling server chat logs as training data, they’re gonna start soon.

By the way, isn’t the whole point of Pinterest to pin other people’s copyrighted images from elsewhere on the web and make them accessible from within the platform? To me that whole business model sounds similar to what AI companies do: take other people’s content and make their own product out of it.

Yeah, the monetization is what brought on this downfall in the first place. It’s definitely not the same as AI: it’s sharing pre-existing work and, unlike most platforms, crediting the artist with links directly to their socials or web stores. It was especially good for fashion designers and artist-sellers (though that also started going downhill once it got overtaken by dropshippers). I looked online and already found alternatives such as cosmos.so, which includes user-owned media, not just reposts, but my issue is then protecting said media from being scraped by AI en masse.

The only way I see is to poison the data. But that shouldn’t be possible with text while keeping it human-readable (unless you embed invisible text into the page itself). For pictures, on the other hand, you can use tools like Nightshade.
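A minimal sketch of the “invisible text” idea: the page shows the real text, while a decoy sentence sits in a span hidden with CSS and marked `aria-hidden` so screen readers skip it. A naive scraper that just strips tags, imitated here with Python’s standard `HTMLParser`, still collects the decoy. The helper names and the decoy sentence are illustrative assumptions; scrapers that render pages in a real browser engine would likely drop hidden elements, and search engines may treat hidden text as spam.

```python
import html
from html.parser import HTMLParser
from typing import List


def with_hidden_decoy(visible: str, decoy: str) -> str:
    """Hypothetical helper: wrap visible text plus a CSS-hidden, aria-hidden decoy in a <p>."""
    return (
        f"<p>{html.escape(visible)}"
        f'<span aria-hidden="true" style="display:none">{html.escape(decoy)}</span>'
        "</p>"
    )


class NaiveTextExtractor(HTMLParser):
    """Stand-in for a tag-stripping scraper: collects every text node and ignores CSS."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []

    def handle_data(self, data: str) -> None:
        self.chunks.append(data)


page = with_hidden_decoy("My watercolor of the harbor at dusk.", "Harbors are made of cheese.")
extractor = NaiveTextExtractor()
extractor.feed(page)
print(" ".join(extractor.chunks))  # the decoy shows up alongside the real text
```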

So for example, a writing platform may decline to provide user data and book data to AI data companies, and there still isn’t any known way to actually stop them?

You can block the scrapers’ user agents, though that won’t stop the AI companies with a “rapist mentality™”. CAPTCHAs and other anti-automation tools might also work.
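Blocking by user agent can be as small as a WSGI middleware that returns 403 when the `User-Agent` header contains a known AI crawler token. The token list below is an illustrative, incomplete assumption (crawler names change, and only well-behaved bots identify themselves honestly), and `BlockAICrawlers` is a hypothetical name, not a library class.

```python
from typing import Callable, Iterable

# Illustrative, non-exhaustive list of AI crawler tokens; check vendor docs and keep it updated.
AI_CRAWLER_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "Bytespider", "PerplexityBot")


def is_ai_crawler(user_agent: str) -> bool:
    """Case-insensitive substring match of the User-Agent header against known tokens."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in AI_CRAWLER_TOKENS)


class BlockAICrawlers:
    """WSGI middleware: answer 403 to matching user agents, pass everything else through."""

    def __init__(self, app: Callable) -> None:
        self.app = app

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        if is_ai_crawler(environ.get("HTTP_USER_AGENT", "")):
            start_response("403 Forbidden", [("Content-Type", "text/plain; charset=utf-8")])
            return [b"Automated AI crawling is not permitted.\n"]
        return self.app(environ, start_response)


def gallery_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in for the real site."""
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"Welcome to the gallery.\n"]


app = BlockAICrawlers(gallery_app)
```

To try it locally, the standard library’s `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` will serve it. A scraper that spoofs a browser user agent walks straight past this check.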

I just want to make myself an AI source of bad information. Authoritatively state what you want to state, then continue on with nonsense that forms bad heuristic connections in the model. Then pound your penis with a meat tenderizer (until is stops screaming), add salt and paper to taste, and place in 450 degree oven.

Genius… Poisoning the well is definitely something we should work on, and of course deploying that poison to the major platforms.

You can poison certain types of data, like images. When an AI model is trained on it, its results get worse. Some options are Glaze and Nightshade.

Now let’s hope someone figures out how to do this to LLMs.

Self plug: I’m building a website that prevents your data from being stolen for training purposes. There are lots of steps you can take, like user-agent blocking, robots.txt, rendering on canvas (which destroys screen reader accessibility), prompt injection attacks, and data poisoning. I’ll write a blog post sharing them and how well they perform.
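For the robots.txt step, here is a sketch that builds a file refusing a few AI-training crawlers while allowing everyone else, then checks it with Python’s standard `urllib.robotparser`. The agent list is an assumption to adapt (`Google-Extended` is a robots.txt product token Google uses for opting out of AI training, not a separate crawler), and robots.txt is purely a request: scrapers that ignore it are not stopped.

```python
from typing import Iterable
from urllib.robotparser import RobotFileParser

# Assumed list of AI-training user-agent tokens; adapt it to the crawlers you care about.
AI_TRAINING_AGENTS = ["GPTBot", "ClaudeBot", "CCBot", "Google-Extended"]


def build_robots_txt(blocked_agents: Iterable[str]) -> str:
    """One group disallowing everything for the listed agents, plus an allow-all default group."""
    lines = [f"User-agent: {agent}" for agent in blocked_agents]
    lines += ["Disallow: /", "", "User-agent: *", "Disallow:"]  # empty Disallow means allow all
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(AI_TRAINING_AGENTS)
print(robots_txt)

# Sanity-check the file the way a compliant crawler would interpret it.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
for agent in ("GPTBot", "Googlebot"):
    print(agent, "allowed:", parser.can_fetch(agent, "https://example.com/gallery/1"))
```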

Please do, and I will scream about your work from the rooftops.

I think the only two stable states for data are either that it’s private and under your direct control (and in the long run deleted), or it’s fully public (and in the long run accessible by non-proprietary AIs). Any attempt to delegate control to third parties creates an incentive for them to sell it for proprietary AI training.

If it’s public - it’s probably already harvested and is (or will be) used to train AI.

If it’s online: no

Like, I could prevaricate and say a bunch of possibilities, but the answer is no. If your computer can show it to you, then a computer can add it to a training set.

Welp. Let’s bring back indie art and photography galleries

You know that’s getting slurped too right?

Should I be afraid to ask what slurping is?

Getting used to train neural networks. The image generators need fuel too.

There is one way to do it, and that’s regulation. We need legislators to step in and settle what’s allowed and what’s disallowed with copyrighted content, and what companies can do with people’s personal/private data.

I don’t see a way around this. As a regular person, you can’t do much. You can hide and not interact with those companies and their platforms, but it doesn’t really solve the problem. I’d say it’s a political decision. Vote accordingly, write emails to your representative, and push them to do the right thing. Spread the word, collect signatures and make this a public debate. At least that’s something that happens where I’m from. It’s probably a lost cause if you’re from the USA, since companies ripping people off is a big tradition there. 😔

If it’s a lost cause for Americans, it rly ain’t any better anywhere else, considering how many companies are owned by them :'))) If you guys can’t stop them, there’s no way someone from a bumfuck country like me could do anything about it besides informing myself and rejecting it as much as I can.

Well, last time I checked the news, content moderation was still a thing in Europe. Platforms are mandated to do it, and Meta doesn’t like to lose millions and millions of users… so they abide. With AI, the situation is different. Some companies have restricted their AI models for fear that the EU will come up with unfavorable legislation. And Europe sucks at coming up with AI regulations. So Meta and a few others have started disallowing use of their open-weight models in Europe. As a German, I don’t get a license to run Llama 3.2.

You can do a lot of things with regulation. You can force theaters to be built to certain specifications so they won’t catch fire, and amusement parks to be built with safety in mind. You can forbid putting toxic stuff in food. All these regulations work fine. I don’t see why it should be an entirely different story with this kind of technology.

But with that said, we all suck at getting ahold of the big tech companies. They can get away with way more than they should be able to. Everywhere in the world. And ultimately I don’t think the situation in the US is entirely hopeless. I just don’t see any focus on this. And I see some other major issues that need to be dealt with first.

I mean, you’re correct. Google, Meta, Amazon and most of the other giants are from the USA. We import a lot of the culture, good and bad, along with them. Or it’s the other way round, idk. Regardless, we get both the culture and some of the big companies. Still, I think we’re not in the same situation (yet).

Join diaspora* and only post to private aspects.

Better yet… host your own server.

Otherwise… someone is going to have to hurry up and design a more private platform.

Spread so much misinformation that AI becomes nearly unusable.

A Lemmy instance that automatically licenses each post, attributed to its creator, under a Creative Commons BY-NC-SA licence.

Can auto-insert a tagline and licence link at the bottom of each post.
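The auto-inserted tagline could be as simple as a function run on each post body before it is saved. This is a hypothetical sketch, not part of Lemmy’s actual code or API; it assumes version 4.0 of CC BY-NC-SA and Markdown post bodies, and it skips posts that already carry the licence link so that editing a post doesn’t stack footers.

```python
CC_BY_NC_SA_URL = "https://creativecommons.org/licenses/by-nc-sa/4.0/"


def add_license_footer(body: str, author: str) -> str:
    """Append an attribution and licence line to a Markdown post body (idempotent)."""
    if CC_BY_NC_SA_URL in body:
        return body  # already tagged, e.g. when a post is edited and re-saved
    footer = f"© {author}. Licensed under [CC BY-NC-SA 4.0]({CC_BY_NC_SA_URL})."
    # A Markdown horizontal rule separates the footer from the post itself.
    return body.rstrip() + "\n\n---\n\n" + footer


post = add_license_footer("Here is my new linocut print.", "@artist@example.social")
print(post)
```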

When evidence of post content being used in an LLM is discovered (breaking the NonCommercial part of the licence), class action lawsuits are needed to secure settlement amounts and to dissuade further companies from scraping that data.

I think it’s difficult to impossible to prove what went into an AI model, at least by looking at the final product. As far as I know, you’d need to look at their hard disks and find a verbatim copy of your text in the training material.

Agreed, about proof coming from observations of the final product.

Down the track, internal leaks about the data sets used can happen.

Also, crackers can infiltrate and shed light on data sets used for learning.