Now where is the shovel head maker, TSMC?

And then China popping their head out claiming Taiwan is part of China because they want to seize TSMC

deleted by creator

Those things aren’t mutually exclusive. Yes, they are dumping massive resources into SMIC. Yes, they also want to maintain imperialism over Taiwan, and TSMC is a part of that. Some of it is fear-mongering, sure, but China is consistently confrontational in the South China Sea and beyond. There’s a reason they maintain an abrasive naval presence there and keep pressing against the Philippines.

https://www.ft.com/content/b4ee2e18-3256-4371-8369-9a3118959fca

> they also want to maintain imperialism over Taiwan

Not to deny the realities of the tensions there, but liberals are relatively loose with the term imperialism. There is a difference between an imperialist state like the US and an anti-imperialist (and until recently imperialized) state like China.

> China is consistently confrontational in the South China Sea and beyond

Yeah why so confrontational, China?

Foreign Policy, 2013: Surrounded: How the U.S. Is Encircling China with Military Bases. And that article is a decade old; it’s only gotten worse.

The US has over 750 overseas military bases around the world, and is building more to further encircle China. Meanwhile China has one anti-piracy base in Djibouti.

Buddy, that’s literally what the US State Department claims on its official website:

The US State Department doesn’t decide which countries own or control which territory, now does it? How exactly can you say that territory you don’t control (neither legally nor militarily), and likely never will, is part of your own country? Furthermore, why would the US risk ruining trade relations with China by needlessly pointing out reality, when recognizing Taiwanese independence doesn’t benefit the US?

deleted by creator

Has been part of China for 2000 years; Anglo imperialism won’t change that.

Pretty sure China has actually been a part of Taiwan for 2000 years

Edited the price to something more Nvidia-ish:

deleted by creator

How about GeForce NOW?

deleted by creator

You can buy them new for somewhat reasonable prices. What people should really look at is used 1080 Tis on eBay. They’re going for less than $150 and still play plenty of games perfectly fine. It’s the budget PC gaming deal of the century.

deleted by creator

Could import from Taiwan instead?

deleted by creator

You can still look for used ones locally, either through hardwareswap or FB Marketplace (unfortunately that’s the best secondhand marketplace atm). Other options include liquidation companies, and sometimes universities also have a big market (from both staff and students).

It’s still been pretty rough, though. Good luck with your search.

deleted by creator

All of this to run a program that is essentially typing a question into Google and adding “Reddit” at the end of it.

They spent so much time disconnected from reality, trying to create artificial intelligence, that they forgot regular intelligence exists.

They will eat massive shit when that AI bubble bursts.

I doubt it. Regardless of the current stage of machine learning, everyone is now tuned in and pushing the tech. Even if LLMs turn out to be mostly a dead end, everyone investing in ML means that demand for doing LOTS of floating point math very quickly, without the overhead of doing it on a CPU, isn’t going away any time soon. Which means Nvidia is sitting pretty.

deleted by creator

deleted by creator

Don’t forget AMD, good potential if they bring out similar technology to compete with NVIDIA. Less so Intel, but they’re in the GPU market too.

Does ARM do anything special with AI? Or is that just the actual chip manufacturers designing that themselves?

Don’t forget Qualcomm either.

Nvidia’s being pretty smart here ngl

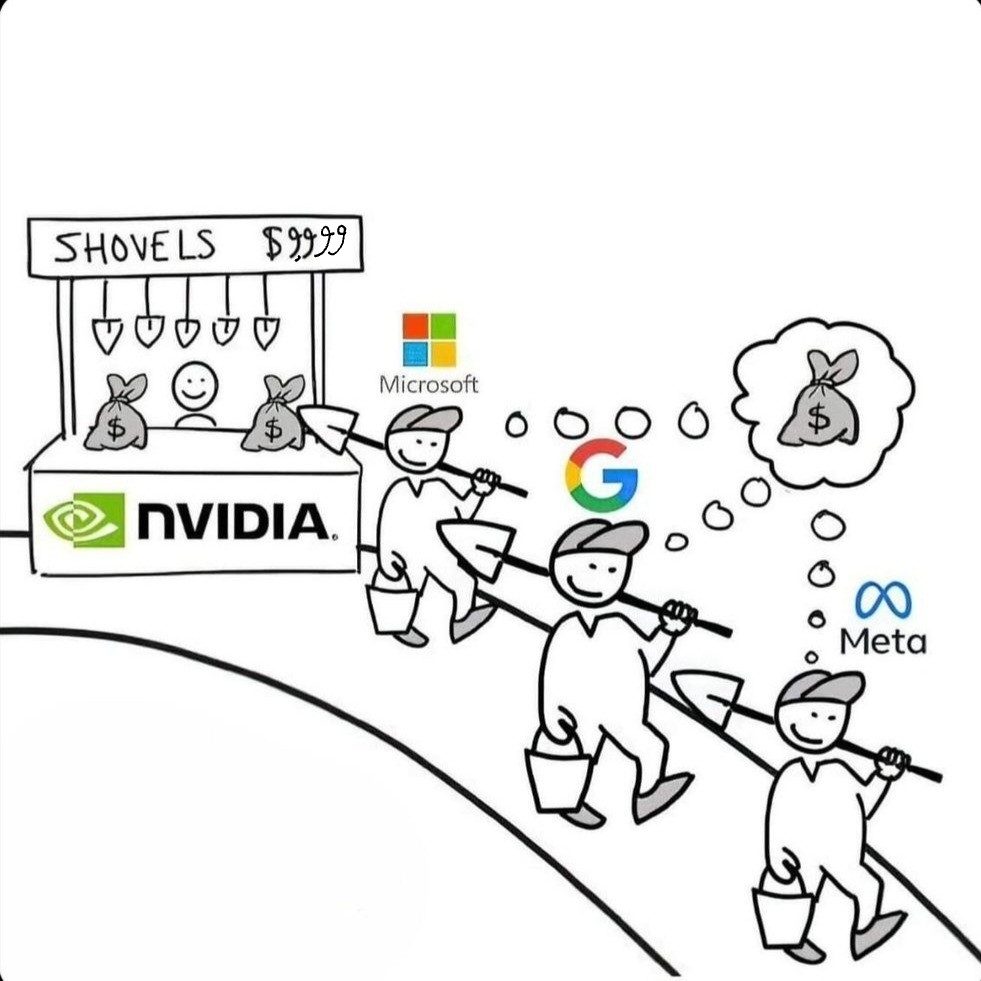

This is the AI gold rush, and they sell the tools.

Yes that’s the meme.

Serious Question:

Why is Nvidia the AI king, while I see nothing from AMD for AI?

Simple Answer:

CUDA

I’m an AI Developer.

TLDR: CUDA.

Getting ROCm to work properly is like herding cats.

You need a custom implementation for the specific operating system; the driver version must be locked and compatible, especially with a Workstation / WRX card (the Pro drivers are particularly prone to breaking); you need the specific dependencies compiled for your variant of hipBLAS, or ZLUDA; if that doesn’t work, you need ONNX transition graphs, but then you find out PyTorch doesn’t support ONNX unless it’s 1.2.0, which breaks another dependency of X-Transformers, which then breaks because the version of hipBLAS is incompatible with that older version of Python and …

Inhales

And THEN, MAYBE, it’ll work at 85% of the speed of CUDA, if it doesn’t crash first due to an arbitrary error such as CUDA_UNIMPEMENTED_FUNCTION_HALF.

You get the picture. On Nvidia, it’s click, open, CUDA working? Yes? Done. You don’t spend 120 hours fucking around and recompiling for your specific use case.
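For a sense of what that "CUDA working? Yes?" check looks like from PyTorch, here is a minimal sketch. `describe_gpu_backend` is a hypothetical helper name, and the code assumes PyTorch may or may not be installed. ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` API and set `torch.version.hip`, so the helper checks that to tell the two backends apart.

```python
def describe_gpu_backend() -> str:
    """Report which GPU backend PyTorch sees, or why there is none."""
    try:
        import torch
    except ImportError:
        return "pytorch not installed"

    if not torch.cuda.is_available():
        return "no GPU backend available (CPU only)"

    # ROCm builds reuse the torch.cuda namespace; torch.version.hip is set only on them.
    hip = getattr(torch.version, "hip", None)
    name = torch.cuda.get_device_name(0)
    if hip:
        return f"ROCm/HIP {hip} on {name}"
    return f"CUDA {torch.version.cuda} on {name}"


if __name__ == "__main__":
    print(describe_gpu_backend())
```

On a working Nvidia setup this is the whole story; on an unsupported AMD card the check can pass and things still fall over later, which is what the rest of this comment is about.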

Also, you need a supported card. I have a potato going by the name RX 5500, which is not on the supported list. I have the choice between three ROCm versions:

- An age-old prebuilt: generally works, but occasionally crashes the graphics driver unrecoverably. Linux tries to reinitialise everything, but that fails; it needs a proper reset. I do need to tell it to pretend I have a different card.

- A custom-built one, which I fished out of a Docker image I found on the net because I can’t be arsed to build that behemoth. It’s dog-slow because it uses all generic code and no specialised kernels.

- A newer prebuilt, any of them. Works fine for some, or should I say very few, workloads (mostly just BLAS stuff); otherwise it simply hangs. Presumably they updated the kernels and now use instructions my card doesn’t have.

Option #1 is what I’m actually using. I can deal with a random crash every other day to every other week or so.

It really would not take much work for them to offer a fourth version: one that’s not “supported-supported” but “we’re making sure this thing runs”. Current ROCm code, using kernels written for other cards if they happen to work, and generic code otherwise.
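The "borrow another card's kernels if they work, else fall back to generic code" policy can be sketched as a simple dispatch loop. This is a toy illustration, not how ROCm actually selects kernels: a "kernel" here is just a Python function computing a dot product, and `pick_kernel`, `generic_dot`, and `PROBE` are hypothetical names.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Stand-in for a GPU kernel: any function that computes a dot product.
Kernel = Callable[[Sequence[float], Sequence[float]], float]

# Small input used to check that a borrowed kernel gives the right answer.
PROBE: Tuple[List[float], List[float]] = ([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])


def generic_dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Portable fallback: slow, but correct on any hardware."""
    return sum(x * y for x, y in zip(a, b))


def pick_kernel(arch: str, tuned: Dict[str, Kernel]) -> Kernel:
    """Try the kernel built for this exact arch first, then kernels built for
    other archs; return the first one that passes a quick correctness probe,
    otherwise the generic fallback."""
    expected = generic_dot(*PROBE)
    candidates = [arch] + [other for other in tuned if other != arch]
    for name in candidates:
        kernel = tuned.get(name)
        if kernel is None:
            continue  # nothing was built for this arch
        try:
            if abs(kernel(*PROBE) - expected) < 1e-9:
                return kernel
        except Exception:
            continue  # e.g. the kernel uses an instruction this card lacks
    return generic_dot
```

For example, `pick_kernel("gfx1012", {"gfx1030": some_kernel})` returns `some_kernel` only if it runs and gives the right answer; the `gfx…` strings are just illustrative target names. On real hardware, a kernel using a missing instruction tends to hang or take the driver down rather than raise a clean exception (as with the newer prebuilt described here), so a real version would need to run the probe in a separate process with a timeout.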

Seriously, ROCm is making me consider Intel cards. Price/performance is decent, there’s plenty of VRAM (at least for their class), and apparently their API support is actually great. I don’t need CUDA or ROCm, after all; what I need is PyTorch.
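The "what I need is PyTorch" point is easiest to see in device-agnostic code: pick whatever accelerator PyTorch exposes and move tensors there. A minimal sketch, assuming PyTorch may not be installed; `pick_device` is a hypothetical helper. The `torch.xpu` namespace for Intel GPUs exists only in recent PyTorch releases, so it is looked up defensively.

```python
def pick_device() -> str:
    """Return a PyTorch device string, preferring any GPU backend over the CPU."""
    try:
        import torch
    except ImportError:
        return "cpu"  # no PyTorch at all: nothing to accelerate

    # Covers both Nvidia (CUDA) and AMD (ROCm) builds; ROCm reuses the "cuda" device type.
    if torch.cuda.is_available():
        return "cuda"
    # Intel GPUs show up as "xpu" in recent releases; older releases lack torch.xpu.
    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"
```

Model code then calls something like `model.to(pick_device())` and never mentions a vendor, which is why the backend underneath matters less than whether PyTorch supports it well.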